Past talks

The United Racisms of America: Conceptualizing, Measuring and Understanding Anti-Black Human Rights Violations, 1860-2010

Christian Davenport

February 1, 2024

Abstract:

This event is part of the Hanes Walton, Jr. Lecture Series.

A great deal has been discussed about the different types and degrees of violence/discrimination directed against African Americans since they were brought to the United States. Unfortunately, the work on this topic has largely been fragmented. We thus have separate literatures on slavery, lynching, Jim Crow (old and new), police violence, disenfranchisement, incarceration and hate crimes. Despite suggestions that these are all manifestations of a singular underlying phenomenon, there is very little empirical effort directed toward testing such an idea. Drawn from a larger research project on the consequences of racial violence funded by the Research Council of Norway, the current talk explores whether and to what degree distinct forms of anti-Black human rights violations fit together in a coherent sense as well as how they vary across space and time. These data will not only shed much light on what was done to African Americans between the years 1860 and 2010 but will also facilitate systematic evaluations of when things began to get worse and better, what the relative intensity of violations was, how long bad/good periods lasted, what the legacies/consequences of anti-Black human rights violations are, as well as where efforts should be made to address PTSD, reconciliation and/or reparations. This effort will also assist in the creation of a grand accounting of what has taken place by allowing individuals throughout the United States to provide their stories and archival material regarding what they experienced.

Equity and the ACA’s Impact on Indigenous Birth Givers

Danielle Gartner

January 29, 2024

Abstract:

Indigenous birth givers bear a disproportionate burden of poor pregnancy outcomes (e.g., preterm birth, hypertensive disorders, gestational diabetes mellitus, severe maternal morbidity, and maternal and infant mortality) that disrupt birth ceremonies and portend future health concerns experienced by children, mothers, families, and communities. Indigenous birth givers also often hold citizenship in sovereign Nations with whom the US has agreements of fiduciary responsibility. The Patient Protection and Affordable Care Act (ACA) is a complex set of policies that have demonstrated positive impact on perinatal health. Unfortunately, conversations to repeal the ACA are ongoing and often do not include the specific needs or contexts of American Indian and Alaska Native (AIAN) peoples. Because the ACA included several AIAN-specific provisions, there is opportunity for differential impact and ultimately, the potential to reduce health inequities between AIAN and white birth givers. In partnership with an advisory board of Indigenous health services and policy experts, this project combines qualitative and quantitative research analyzing national datasets and interviews with expert consultants to provide understanding of how to make federal health policy work equitably for Indigenous birth givers in the US.

Distinguishing between sex and gender: Implications for transgender- and intersex-inclusive data collection

Kate Duchowny (University of Michigan)

October 30, 2023

This event is part of the ISR Population Studies Center Brown Bag Series.

Abstract:

Despite recent calls to distinguish between sex and gender, these constructs are often assessed in isolation or are used interchangeably. In this talk, I will present data that quantifies the disagreement between chromosomal and self-reported sex and identifies potential reasons for discordance using data from the UK Biobank. My co-authors and I show that among approximately 200 individuals with sex discordance, 71% of discordances were explained by intersex traits or transgender identity. These findings imply that health and clinical researchers have a unique opportunity to advance the rigor of scientific research as well as the health and well-being of transgender, intersex, and nonbinary people, who have long been excluded from and overlooked in clinical and survey research.

Teaching Inclusive and Policy-Relevant Statistical Methods

Catie Hausman (University of Michigan)

April 3, 2023

Abstract:

Professor Hausman will share examples of inclusive pedagogical approaches to teaching quantitative methods, based on her experiences teaching Statistics to master’s level students in the School of Public Policy. She’ll describe methods that can improve learning outcomes and student engagement, by recognizing a diverse array of learning styles and student backgrounds. She’ll also discuss how to promote critical thinking in quantitative classes, both to improve student comprehension and to acknowledge ethical considerations in the application of statistical methods.

Critical Quantitative Methodology: Advanced Measurement Modeling to Identify and Remediate Racial (and other forms of) Bias

Matt Diemer (University of Michigan)

February 20, 2023

Abstract:

The emerging Critical Quantitative (CQ) perspective is anchored by five guiding principles (i.e., foundation, goals, parity, subjectivity, and self-reflexivity) to mitigate racism and advance social justice. Within this broader methodological perspective, sound measurement is foundational to the quantitative enterprise. Despite the problematic history of measurement, it can be repurposed for critical and equitable ends. MIMIC (Multiple Indicator and MultIple Causes) models are a measurement strategy to simply and efficiently test whether a measure means the same thing and can be measured in the same way across groups (e.g., racial/ethnic and/or gender). This talk considers the affordances and limitations of MIMICs for critical quantitative methods, by detecting and mitigating racial, ethnic, gendered, and other forms of bias in items and in measures.

The Promise of Inclusivity in Biosocial Research – Lessons from Population-based Studies

Jessica Faul (Research Associate Professor, SRC, Institute for Social Research)

Colter Mitchell (Research Associate Professor, SRC, Institute for Social Research)

April 18, 2022

Giving Rare Populations a Voice in Public Opinion Research: Pew Research Center’s Strategies for Surveying Muslim Americans, Jewish Americans, and Other Populations

Courtney Kennedy (Director of Survey Research at Pew Research Center)

April 6, 2022

Abstract:

A typical public opinion survey cannot provide reliable insights into the attitudes and experiences of relatively small and diverse religious groups, such as adults identifying as Jewish or Muslim. Not only are the sample sizes too small, but adults who speak languages such as Russian, Arabic, or Farsi (and not English) are excluded from interviewing. This presentation discusses how Pew Research Center has sought to address this research gap by fielding large, multilingual probability-based surveys of special populations. Examples include the Center’s 2017 Survey of Muslim Americans and the 2020 Survey of Jewish Americans. These studies present numerous challenges in sampling, recruitment, crafting appropriate questions, and weighting. The presentation will also discuss the Center’s methods for studying racial and ethnic populations with the goal of reporting on diversity within these populations, as opposed to treating them as monolithic groups.

How Invalid and Mischievous Survey Responses Bias Estimates of LGBQ-heterosexual Youth Risk Disparities

Joseph Cimpian (Associate Professor of Economics and Education Policy at NYU Steinhardt)

March 16, 2022

Abstract:

Survey respondents don’t always take surveys as seriously as researchers would like. Sometimes, they provide intentionally untrue, extreme responses. Other times, they skip items or fill in random patterns. We might be tempted to think this just introduces some random error into the estimates, but these responses can have undue effects on estimates of the wellbeing and risk of minoritized populations, such as racially and sexually minoritized youth. Over the past decade, and with a focus on youth who identify as lesbian, gay, bisexual, or questioning (LGBQ), a variety of data-validity screening techniques have been employed in attempts to scrub datasets of “mischievous responders,” youths who systematically provide extreme and untrue responses to outcome items and who tend to falsely report being LGBQ. In this talk, I discuss how mischievous responders—and invalid responses, more generally—can perpetuate narratives of heightened risk, rather than those of greater resilience in the face of obstacles, for LGBQ youth. The talk will review several recent and ongoing studies using pre-registration and replication to test how invalid data affect LGBQ-heterosexual disparities on a wide range of outcomes. Key findings include: (1) potentially invalid responders inflate some (but not all) LGBQ–heterosexual disparities; (2) this is true more among boys than girls; (3) low-incidence outcomes (e.g., heroin use) are particularly susceptible to bias; and (4) the method for detection and mitigation affects the estimates. Yet, these methods do not solve all data validity concerns, and their limitations are discussed. While the empirical focus of this talk is on LGBQ youth, the issues and methods discussed are relevant to research on other minoritized groups and youth generally, and speak to survey development, methodology, and the robustness and transparency of research.

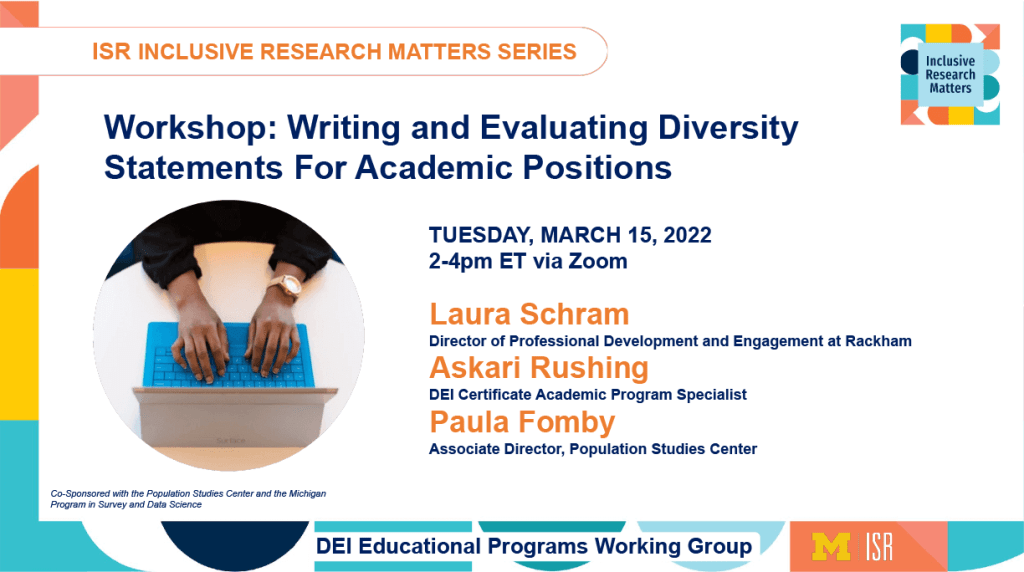

Workshop: Writing and Evaluating Diversity Statements For Academic Positions

March 15, 2022

Workshop description:

Increasingly, hiring committees are interested in how prospective faculty job and postdoctoral fellowship candidates will contribute to diversity, equity, and inclusion. As a result, many academic employers have begun to request a “diversity statement” as part of the faculty job or postdoctoral fellowship application process. The purpose of this workshop is to discuss best practices for writing and reviewing diversity statements for academic positions.

This session is most relevant for graduate students, postdocs, and faculty. Staff who support academic searches may also find this informative.

Learning objectives:

- Reflect on ways you are committed to diversity, equity, and inclusion in your research, teaching, engagement, leadership, or other areas.

- Identify resources that allow you to participate and contribute to DEI initiatives, opportunities, projects, and research.

- Review best practices to write a diversity statement.

- Learn how to critically evaluate diversity statements.

Optional pre-workshop video, about the history of the diversity statement.

Opening Statements: Sheri Notaro, ISR Director of Diversity, Equity, and Inclusion

Workshop Facilitators:

Laura Schram – Director of Professional Development and Engagement at Rackham

Askari Rushing – DEI Certificate Academic Program Specialist

Paula Fomby – Associate Director, Population Studies Center

Equity & Inclusion in Accessible Survey Design

Scott Crawford (Founder and Chief Vision Officer, SoundRocket)

Abstract:

As we work to adapt research designs to make use of new technologies (web and smart devices), it is also important to consider how study design and survey design may impact those who rely on assistive technology. The compliance standards of Sections 508 (covering use of accessible information and communication technology) and 501 (addressing reasonable accommodation) of the Rehabilitation Act of 1973 have been around for a long time, but the survey research industry has often taken the path of providing reasonable (non-technological) accommodations for study participants. These often involve alternate modes of data collection, but rarely provide a truly equitable solution for study participation. If a web-based survey is not compliant with assistive technologies, the participant may be offered the option of completing a survey with an interviewer. Survey methodologists know well that introducing a live human interaction may change how participants respond, especially if the study involves sensitive topics. Imagine a workplace survey on Diversity, Equity, and Inclusion where a sight-impaired employee is asked to answer questions about how they are treated in their workplace, but they are required to answer these questions through an interviewer, and not privately via a website. Not only is this request not equitable for the employee (fully sighted employees get to respond more privately), it can also bias the results if the participant is not honest about their struggles for fear of backlash from their employer should the interviewer pass along their frustrations. When such people are denied equitable participation, future decisions will be made on potentially faulty results about their experience.

In this presentation, Crawford focuses on developing an equitable research design, partly by considering the overall study and not just the technology itself, and partly by sharing experiences in the development of a highly accessible web-based survey that is compliant with assistive technology (screen readers, mouse input grids, voice, keyboard navigation, etc.). He presents experimental, anecdotal, and descriptive experiences with accessible web-based surveys and research designs in higher education student, faculty, and staff surveys conducted on the topic of Diversity, Equity, and Inclusion. The results are directly relevant to inclusion and equity in these settings, and include some surprising unintended positive consequences of some of these design decisions. Lastly, he shares some next steps for where the field may go in continuing to improve in these areas.

Representative Research: Assessing Diversity in Online Samples

Frances Barlas (Vice President, Research Methods at Ipsos Public Affairs)

Abstract:

In 2020, we saw a broader awakening to the continued systemic racism throughout all aspects of our society and heard renewed calls for racial justice. For the survey and market research industries, this has renewed questions about how well our industry ensures that its public opinion research captures the full set of diverse voices that make up the United States. These questions were reinforced in the wake of the 2020 election with the scrutiny faced by the polling industry and the role that voters of color played in the election. In this talk, we’ll consider how well online samples represent people of color in the United States. Results from studies that use both KnowledgePanel – a probability-based online panel – and non-probability online samples will be shared. We’ll discuss some strategies to improve our sample quality.

Dr. Frances Barlas is a Senior Vice President and the lead KnowledgePanel Methodologist for Ipsos. She has worked in the survey and market research industries for 20 years. In her current role, she is charged with overseeing and advancing the statistical integrity and operational efficiency of KnowledgePanel, the largest probability-based panel in the US, and other Ipsos research assets. Her research interests focus on survey measurement and online survey data quality. She holds a Ph.D. in Sociology from Temple University.